Despite the vast potential of artificial intelligence for use in higher education, one topic continues to dominate campus debates and conferences: academic integrity and assessment.

While integrity concerns are undeniably important, the reflex is sometimes to reach for policing or AI-detection strategies. Academics Thomas Corbin, Phillip Dawson and Danny Liu note in their 2025 paper, “Talk is cheap: why structural assessment changes are needed for a time of GenAI”, that many institutions default to discursive changes to assessment, such as enforcing traffic light systems that tell students how they can or cannot use AI for the assessed task. This reactive stance often reflects a reluctance to challenge pre-AI assessment norms, and it can skew assessment design towards policing, pushing learning into the background.

As an alternative, Corbin et al propose structural changes to assessment, which they define as “modifications that directly alter the nature, format or mechanics of how a [assessment] task must be completed”. Other tools, such as the AI Assessment Scale from Mike Perkins of British University Vietnam, help educators decide, and openly communicate to students, how much AI use is pedagogically appropriate in any given assessment.

In the age of AI, holistic instructional design must explicitly incorporate new complexities, such as human-AI collaboration dynamics, cognitive offloading risks and authentic engagement, in ways traditional holistic approaches have not. If we focus on assessment alone when considering the impact of AI, we lose the pedagogical rationale that should inform how we advise on course and assessment design.

Diagnose with three AI-focused grids

To effectively navigate the intersection of AI with pedagogy, curriculum and assessment design, teachers need practical tools that offer clear, actionable insights. The Center for Education Innovation at my university is developing a practical set of diagnostic grids, designed to assist teachers in identifying strengths and vulnerabilities across multiple instructional dimensions, evaluating the integration of AI, and promoting authentic and comprehensive learning experiences.

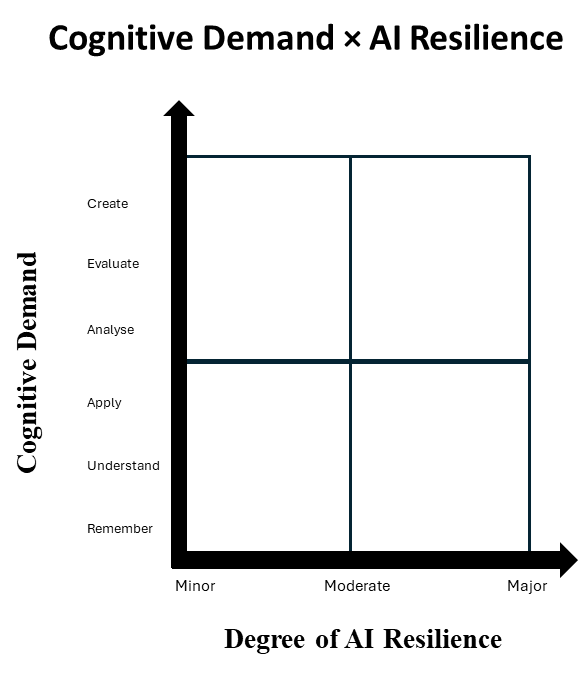

Grid 1: cognitive demand x AI resilience

The cognitive demand x AI resilience grid helps teachers visualise two things at once: how cognitively demanding an assessment or learning task is, and how well it resists being completed by generative AI. For example, simple quizzes that check students’ understanding of a topic land in the low-demand, low-resilience quadrant, while complex tasks such as live practicums sit in the high-demand, high-resilience quadrant.

This offers an instant diagnostic of whether an activity’s intended cognitive demand is matched by its resistance to easy AI completion. It helps teachers holistically align task design, pedagogy and curriculum so that authentic learning, not AI shortcuts, remains at the centre of the course.
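
For teams that want to tabulate their tasks, the grid can be treated as a simple two-axis classification. The sketch below is illustrative only: the 1-to-5 scoring scale, the midpoint threshold, the `Task` class and the example scores are all assumptions made for this example, not part of the grid itself.

```python
from dataclasses import dataclass

# Hypothetical scale: each axis is scored from 1 (low) to 5 (high).
# The midpoint threshold is an assumption made for illustration only.
THRESHOLD = 3


@dataclass
class Task:
    name: str
    cognitive_demand: int  # how much thinking the task asks of students
    ai_resilience: int     # how hard it is for generative AI to complete


def quadrant(task: Task) -> str:
    """Return the grid quadrant as '<level>-demand, <level>-resilience'."""
    demand = "high" if task.cognitive_demand >= THRESHOLD else "low"
    resilience = "high" if task.ai_resilience >= THRESHOLD else "low"
    return f"{demand}-demand, {resilience}-resilience"


# Example scores are invented to mirror the two cases mentioned above.
tasks = [
    Task("Comprehension quiz", cognitive_demand=1, ai_resilience=1),
    Task("Live practicum", cognitive_demand=5, ai_resilience=5),
]

for task in tasks:
    print(f"{task.name}: {quadrant(task)}")
```

A task that scores high on demand but low on resilience is the kind of mismatch the grid is meant to surface, and so is a natural candidate for redesign.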

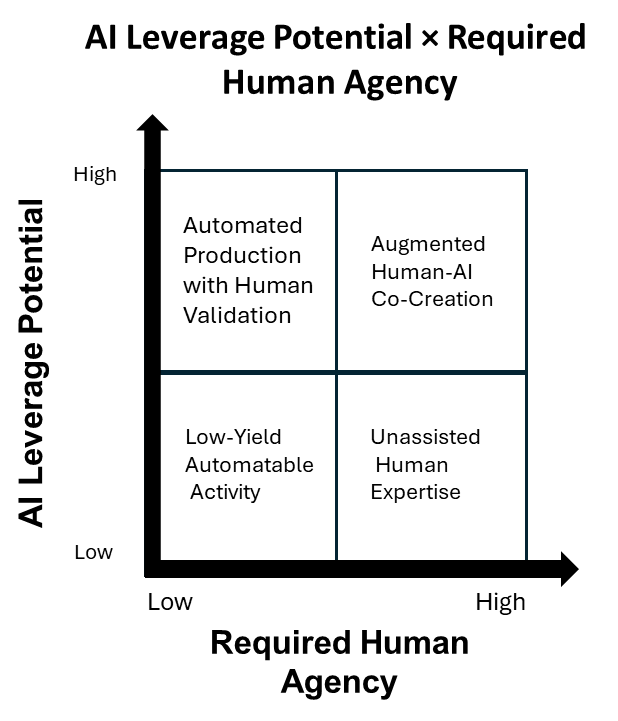

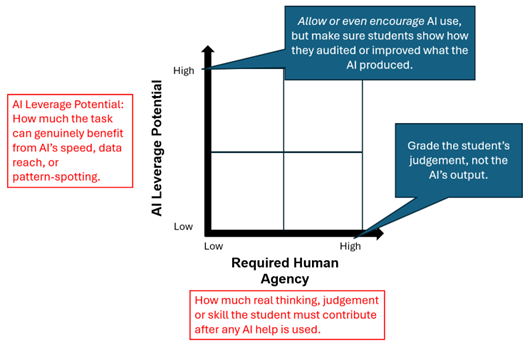

Grid 2: AI leverage potential x required human agency

Next, teachers need to determine the ideal division of labour between student and machine. The AI leverage potential x required human agency grid helps teachers decide strategically where AI adds value and where students must still contribute their own irreplaceable thinking. This matters because students need to develop the skills and knowledge to use AI to get jobs done, as they will in their professional lives.

The grid enables teachers to redesign course content, pedagogic approaches and assessment tasks so that they harness AI for efficiency or creativity, while protecting the core human judgements, skills and metacognitive work that matter most for deep, authentic learning. For example, tasks categorised as low-yield automatable will spark very little learning – discard or redesign them.

Conversely, augmented human-AI tasks should explicitly require students to demonstrate how AI enhanced their work.

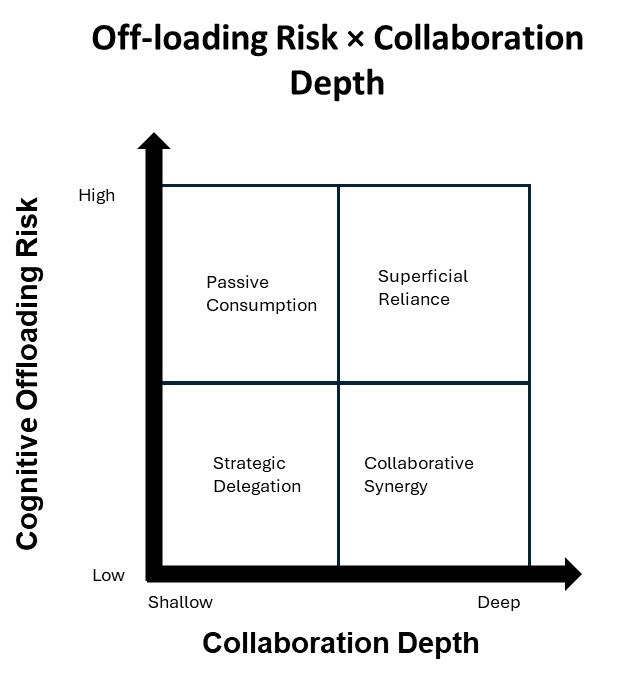

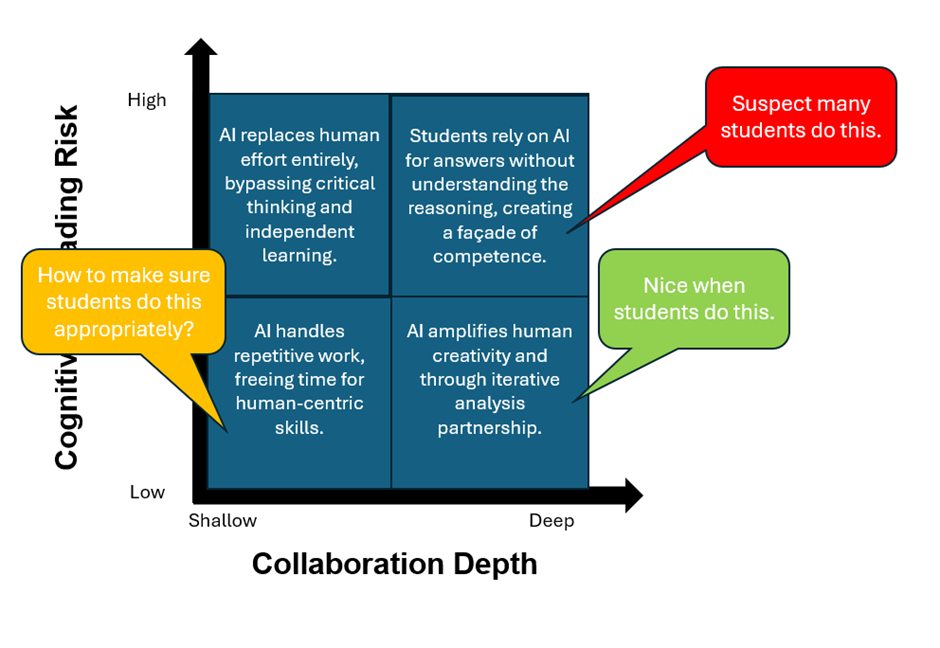

Grid 3: Offloading risk x collaboration depth

The offloading risk x collaboration depth grid helps teachers anticipate how students are likely to behave, so that courses genuinely engage learners rather than encourage passive reliance on AI. The “offloading risk” value measures how easily the cognitive work can be offloaded to, or substituted by, AI, while “collaboration depth” evaluates the quality of student-AI interaction, from superficial copy-pasting to deep iterative engagement. Effective course design nudges students towards thoughtful, iterative AI collaboration (when appropriate), promoting critical thinking rather than mere efficiency. We want students to avoid treating AI like a vending machine for completed learning tasks.

Why the grids matter holistically

Taken together, the three grids create a panoramic dashboard for course teams: Grid 1 flags where learning outcomes demand more authentic cognition, Grid 2 clarifies which parts of the syllabus should be AI‑enhanced versus human‑driven, and Grid 3 exposes behavioural pinch points that may call for extra scaffolds. In concert, they inform not only individual assessments but also the sequencing of activities, prerequisite skills and the balance of synchronous and asynchronous learning across an entire programme.

Sequenced in this way, the grids help you exploit AI where it accelerates routine work, safeguard authentic cognition and embed those choices directly into the assessment design. With this systemic view, curriculum and course designers can sequence AI‑related skills progressively across modules, ensure consistent messaging about acceptable AI use, and build in reflective checkpoints that cultivate students’ metacognitive capacity to collaborate responsibly with AI. They can also assist with structural changes to assessment design, helping both teachers and students gauge how much AI use is pedagogically appropriate in any given learning task.

Sean McMinn is director of the Center for Education Innovation at Hong Kong University of Science & Technology.
